A group of volunteers are making a translation online of the Lexicon of Harpocration. This has some 300 entries, and the translation is nearly complete, in fact. The project is here. The entries seem mainly about people, rather than things, whom a reader of classical literature might find difficulty in identifying.

Online collaborative translation of the Lexicon of Harpocration

Dodge that Memory Hole: Saving Digital News

Newspapers are some of the most-used collections at libraries. They have been carefully selected and preserved and represent what is often referred to as “the first draft of history.” Digitized historical newspapers provide broad and rich access to a community’s past, enabling new kinds of inquiry and research. However, these kinds of resources are at risk of being lost to future users. Networked digital technologies have changed how we communicate with each other and have rapidly changed how information is disseminated. These changes have had a drastic effect in the news industry, disrupting delivery mechanisms, upending business models and dispersing resources across the world wide web.

Current library acquisition and preservation methods for news are closely linked to the physical newspaper. Ensuring that the new modes of journalism, which are moving toward a “digital- and mobile-first” model, are captured and preserved at libraries and other memory institutions is the main goal of the Dodging the Memory Hole series of events. The first was organized in November 2014 by the Reynolds Journalism Institute at the University of Missouri. The most recent took place in May of 2015 and was organized by the Educopia Institute at the Charlotte Mecklenburg Public Library in Charlotte, NC.

I had the opportunity to close out the May meeting and highlight areas where continued work would have an impact in helping libraries collect, preserve and provide access to born-digital news. A (slightly longer but hopefully clearer) version of my talk (pdf) is below.

I want to start with a photograph from last year’s protest in Hong Kong known as the Umbrella Revolution. The picture speaks to the complexity of the problem we face in capturing and preserving the news of today. The protest was unique in that it was one of the first protests in China organized, sustained and broadcast via social media. Capturing a diverse set of materials about this news event would mean capturing the stories from established media companies and the writings and images from individual blogs and other social media. This is especially important in the case of the Umbrella Revolution because official media outlets (and social media accounts) in China are often censored. This protest was also an example of how activism in general has adapted due to networked digital technologies. Future researchers studying social and political movements happening right now would never get the whole story without access to the social media.

The role of the journalist is to get the story out, and just like other publishers in the digital age, journalists have had to adapt to stay relevant. Digital storytelling is becoming more dynamic, exemplified by publications like Highline, a new long-form product from Huffington Post which is richly illustrated with audio and visual elements and is translated into a variety of languages. We can expect that in the pursuit of getting the story out and advancing storytelling, news content will come from more sources, be more dynamic and continue using all kinds of formats and distribution mechanisms.

Libraries have also been transformed by digital technologies. There are a large number of digitized collections; we are creating vast and rich resources and, I think, providing great access and good stewardship to a large amount of this digitized content. Chronicling America and the Digital Public Library of America are great examples of this. However, there are gaps–or holes–in our collections, especially the born-digital content about contemporary events. Libraries haven’t broadly adapted their collecting practices to the current publishing environment, which today is dominated by the web.

Several people at this meeting mentioned the study done by Andy Jackson (ppt) at the British Library. I have his permission to share these slides which he presented at the recent General Assembly of the International Internet Preservation Consortium. It is a simple but powerful study of ten years (2004-2014) worth of content from the UK Web Archive. It aims to find out what they have in their archive that is not on the live web anymore. He looked at a sample of URLs per year and analyzed the content to determine if the content at the URL in the archive was still at the same URL on the live web. He broke down and color coded the URLs according to a percentage scale expressing if the content was moved, changed, missing or gone. He found that after one year half of the content was either gone or had been changed so much as to be unrecognizable. After ten years almost no content still resides at its original URL. This analysis was done across all domains but you can make a logical assumption that news content wouldn’t fare any better if subjected to this same type of analysis.

Fifty percent of URLs in the UK Web Archive have lost or missing content after one year. After ten years nearly all content is moved, changed, missing or gone. Credit: Taken from a presentation given by Andy Jackson at the IIPC GA Apr 27, 2015. The full presentation is available at netpreserve.org.
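To make the idea of that comparison concrete, here is a minimal sketch of how one archived page might be checked against the live web. It is not the method used in the British Library study: the category names, the similarity thresholds and the `fetch_live` callable are all assumptions made for illustration, and the text comparison uses Python's standard `difflib` rather than whatever measure the study applied.

```python
from difflib import SequenceMatcher
from typing import Callable, Optional


def classify_url(archived_text: str,
                 url: str,
                 fetch_live: Callable[[str], Optional[str]],
                 same_threshold: float = 0.9,
                 changed_threshold: float = 0.5) -> str:
    """Compare an archived copy with what the same URL returns today.

    fetch_live is a hypothetical callable supplied by the caller; it returns
    the live page text, or None when the URL no longer resolves.
    The thresholds are illustrative assumptions, not values from the study.
    """
    live_text = fetch_live(url)
    if live_text is None:
        return "gone"
    # ratio() is 1.0 for identical text and 0.0 when nothing matches.
    ratio = SequenceMatcher(None, archived_text, live_text).ratio()
    if ratio >= same_threshold:
        return "same"
    if ratio >= changed_threshold:
        return "changed"
    return "unrecognizable"
```

Running a function like this over a sample of archived URLs for each year, and counting the labels, would produce the kind of year-by-year breakdown shown in the chart.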

We have clear data that if content is not captured from the web soon after its creation, it is at risk. Which brings me to where I think our main challenge is with collecting born-digital news: library acquisition policies and practices. Libraries collect the majority of their content by buying something–a newspaper subscription, a standing order for a serial publication, a package of titles from a publisher, an access license from an aggregator, etc. The news content that’s available for purchase and printed in a newspaper is a small subset of the content that’s created and available online. Videos, interactive graphs, comments and other user-generated data are almost exclusively available online. The absence of an acquisition stream for this content puts it at risk of being lost to future library and archives users.

Establishing relationships (and eventually agreements) with the organizations that create, distribute and own news content is one of the more promising strategies for libraries to collect digital news content. Brian Hocker from KXAS-TV, an NBC affiliate in the Dallas area, shared the story of how KXAS partnered with the University of North Texas Libraries to digitize, share and ultimately preserve their station’s video archives as part of the Portal for Texas History. Jim Kroll from the Denver Public Library also shared his story of acquiring the archives of the Rocky Mountain News after the newspaper ceased publication. Both stories emphasized the importance of establishing lasting relationships with decision-makers from news outlets in their respective communities. They also each created donor agreements that provided community access to the news archives which can serve as models for future agreements.

The relationships that enabled these agreements were the result of what I think of as entrepreneurial collection development in the model of acquiring special collections. The archives were pursued actively and over time; they represented a new type of content, required a new type of relationship with a donor and were a good fit–both geographically and topically–with existing collections at UNT and DPL.

Web archiving is another promising strategy to capture and preserve born-digital news. The Library of Congress recently announced its effort to save news websites, specifically those not affiliated with traditional news companies. Ben Walsh, creator of PastPages.org, announced that his service is now Memento-compliant, which will allow the archived front pages of websites from major-market newspapers that PastPages collects to be available in a Memento search. These projects will capture content at a national level, but the hyper-local news sites and citizen journalism and other niche blogs–news that used to be published as community newsletters or pamphlets–are most likely not being captured. Internet Archive’s Archive-It service is a mechanism for smaller libraries to engage in web archiving and capture some of this unique content. Capturing the social media around news events continues to be challenging, but tools have been developed to capture tweets, and collections of tweets around news events are being captured and shared.

The Dodging the Memory Hole events have thus far been excellent opportunities to bring librarians, archivists, the news industry and technologists together to help save news content for future generations. Look for more from this group on awareness raising, studies on what news content has already been lost, collaborations with the developers of news content management systems, and more guidance on developing donation agreements. To read more about the event, check out Trevor Owens’ report on the IMLS blog.

Palingenesia of Latin Private Rescripts. 193-305 AD: from the Accession of Pertinax to the Abdication of Diocletian

Editor's Note—The palingenesia presented on these pages was prepared by Professor Honoré to accompany his Emperors and Lawyers, 2nd ed. (Oxford: Oxford University Press, 1994), ISBN 0-19-825769-4. It is reproduced here by the kind permission of the author and of the Oxford University Press. Those who consult the palingenesia are asked to observe all appropriate copyright restrictions.

* * *

The Palingenesia lists private imperial rescripts in Latin between 193 and 305 AD. General information about its character is given in the preface. Its core is a comprehensive collection, arranged chronologically, of Latin rescripts to private petitioners on points of law (ad ius). These number 2,609, though as explained in the preface the number of texts listed is somewhat greater, because the compilers of legal codes and other collections have sometimes split a rescript into two or three parts. Some documents that are not private rescripts but that occur in well-known legal sources have also been included. The rescripts have been drawn from a number of sources, including Justinian's Codex (CJ), Digest (D) and Institutes (J Inst) and other ancient collections such as Vatican Fragments (FV), Collatio legum Mosaicarum et Romanarum (Collatio), Consultatio veteris iurisconsulti (Cons), the Visigothic summary of the Codex Gregorianus and Hermogenianus (CG Visi, CH Visi), the Appendices to Lex Romana Visigothorum (Appx LR Visi), and the modern Collectio Librorum Iuris Anteiustiniani (Collectio) together with those found on inscriptions and papyri. All the constitutions listed are taken to be rescripts on points of law in answer to petitions by private individuals (i.e. what were at one time called subscriptiones) except those that are specifically marked as letters to officials or prominent people (epistulae) or other types of imperial law, such as edicts (edicta), final or interlocutory judgments (sententiae), and oral pronouncements made out of court (interlocutiones de plano).
In principle these other types of constitution should not be included in the Palingenesia, but exceptions have been made when they appear in well-known legal sources such as CJ or Vatican Fragments, so that their omission might cause puzzlement. Rescripts on matters other than law, such as the granting or refusal of concessions or privileges, are likewise in principle excluded; so are those of which we have only a text in Greek (with the exception of three from Justinian's Codex and Digest), and those that cannot be dated to the period 193-305. The same holds of impromptu answers to petitioners of the sort collected in the Apokrimata of Septimius Severus, which were not referred to the secretary for petitions (procurator a libellis, magister libellorum) to draft a reply on a point of law and so cannot be relied on as evidence for the secretary's style.

Open Access Journal: Dotawo: A Journal of Nubian Studies

Dotawo: A Journal of Nubian Studies

Nubian studies needs a platform in which the old meets the new, in which archaeological, papyrological, and philological research into Meroitic, Old Nubian, Coptic, Greek, and Arabic sources confront current investigations in modern anthropology and ethnography, Nilo-Saharan linguistics, and critical and theoretical approaches present in postcolonial and African studies.

The journal Dotawo: A Journal of Nubian Studies brings these disparate fields together within the same fold, opening a cross-cultural and diachronic field where divergent approaches meet on common soil. Dotawo gives a common home to the past, present, and future of one of the richest areas of research in African studies. It offers a crossroads where papyrus can meet internet, scribes meet critical thinkers, and the promises of growing nations meet the accomplishments of old kingdoms.

We embrace a powerful alternative to the dominant paradigms of academic publishing. We believe in free access to information. Accordingly, we are proud to collaborate with DigitalCommons@Fairfield, an institutional repository of Fairfield University in Connecticut, USA, and with open-access publishing house punctum books. Thanks to these collaborations, every volume of Dotawo will be available both as a free online pdf and in online bookstores.

Current Volume: Volume 2 (2015)

From the Editors

We are proud to present the second volume of Dotawo: A Journal of Nubian Studies. This journal offers a multi-disciplinary, diachronic view of all aspects of Nubian civilization. It brings to Nubian studies a new approach to scholarly knowledge: an open-access collaboration with DigitalCommons@Fairfield, an institutional repository of Fairfield University in Connecticut, USA, and publishing house punctum books.

The first two volumes of Dotawo have their origins in a Nubian language panel organized by Angelika Jakobi within the Nilo-Saharan Linguistics Colloquium held at the University of Cologne, May 22 to 24, 2013. Since many invited participants from Sudan were unable to get visas due to the shutdown of the German Embassy in Khartoum at that time, the Fritz Thyssen Foundation funded the organization of a second venue of specialists on modern Nubian languages. This so-called “Nubian Panel 2” was hosted by the Institute of African & Asian Studies at the University of Khartoum on September 18 and 19, 2013. This volume publishes the proceedings of that panel. We wish to extend our thanks both to the Fritz Thyssen Foundation and to Professor Abdelrahim Hamid Mugaddam, the then director of the Institute of African & Asian Studies, for their generous support.

Future volumes will address three more themes: 1) Nubian women; 2) Nubian place names; and 3) know-how and techniques in ancient Sudan. The calls for papers for the first two volumes may be found on the back of this volume. The third volume is already in preparation with the assistance of Marc Maillot of the Section française de la direction des antiquités du Soudan (SFDAS), Department of Archeology. We welcome proposals for additional themed volumes, and invite individual submissions on any topic relevant to Nubian studies.

Articles

Old Nubian Relative Clauses
Vincent van Gerven Oei

The Verbal Plural Marker in Nobiin (Nile Nubian)
Mohamed K. Khalil

Relative Clauses in Andaandi (Nile Nubian)
Angelika Jakobi and El-Shafie El-Guzuuli

The Uses and Orthography of the Verb “Say” in Andaandi (Nile Nubian)
El-Shafie El-Guzuuli

Focus Constructions in Kunuz Nubian
Ahmed-Sokarno Abdel-Hafiz

The Consonant System of Abu Jinuk (Kordofan Nubian)
Waleed Alshareef

Possessor Ascension in Taglennaa (Kordofan Nubian)
Gumma Ibrahim Gulfan

Attributive Modifiers in Taglennaa (Kordofan Nubian)
Ali Ibrahim and Angelika Jakobi

Kadaru-Kurtala Phonemes
Thomas Kuku Alaki and Russell Norton

Tabaq Kinship Terms
Khaleel Ismail

An Initial Report on Tabaq Knowledge and Proficiency
Khalifa Jabreldar Khalifa

Number Marking on Karko Nouns
Angelika Jakobi and Ahmed Hamdan

Volume 1 (2014)

And see AWOL's Roundup: Open Access Ancient Nubia and Sudan

Digital Humanities Quarterly CFP

Existing digital tools and related models carry assumptions of knowledge as primarily visual, thus neglecting other sensory or experiential detail and sustaining traditional and often ocularcentric humanities research (Howes, 2005, p. 14; Classen, 1997, pp. 401-12). The excuse is that intangible artefacts, such as senses, movement or emotions leave no traces or evidence, so we cannot reproduce them in their entirety (see Betts 2016 and Foka 2016). While we argue that the lack of evidence is in fact present in any aspect of historical research, we wish to add to related criticisms of knowledge production by challenging current digital research that sustains the past as sanitised historio-cultural ideal (Westin, 2012; Tziovas, 2014). Positing that novel technological methods and tools may help to combat this view, and give us a further insight into historically situated life, this special issue aims to examine the question of whether and how digital technology may facilitate a different and deeper understanding of historically situated life as a sensory and emotional experience. This special issue currently planned for the Digital Humanities Quarterly will contribute to the sensory turn in archaeology and historical research by demonstrating the potential of digital humanities to mobilize a deeper understanding of the past. By discussing the possibilities and problems of (a) digital recreation(s) of narratives and monuments, we further aim to address ways of conveying digital ekphrasis (Lindhé, 2013). Similar to the rhetorical device of ekphrasis, which may be used to describe any experience, digital technology can be a means through which to recreate, experience and study the past in a way that challenges prescribed notions of it.

We are looking for contributions that deal with (but are not limited to)

1. Tangible and sensory technologies for the study of the past

2. Technological advancements in the study of archaeological fieldwork

3. Ways to digitally convey and research narratives

4. Gaming engines

5. Immersive screens, Kinect and other platforms for the study and display of history

We envision first drafts being handed in to us for peer review around December 2015, with about four more months for corrections, making the publication available around early summer 2016.

Please submit your 300 word abstract no later than the 15th of June at

anna.foka@umu.se

Very much looking forward to receiving your contributions!

The ctp2015 team

http://www.challengethepast.com

Harpokration On Line

The Duke Collaboratory for Classics Computing (DC3) is pleased to announce the Harpokration On Line project, which aims to provide open-licensed collaboratively-sourced translation(s) for Harpokration’s “Lexicon of the Ten Orators”.

Users can view and contribute translations at http://dcthree.github.io/harpokration/ or download the project data from GitHub. Detailed instructions for contributing translations can also be found in the announcement blog post.

The project (and name) draw inspiration from the Stoa-hosted Suda On Line project.

The code used to run the project is openly available at https://github.com/dcthree/harpokration. The project also leverages the existing CTS/CITE architecture. This architecture, pioneered for other Digital Classics projects, allows us to build on well-developed concepts for organizing texts and translations—concepts which are transformable to other standards such as OAC and RDF. Driving a translation project using CITE annotations against passages of a “canonical” CTS text seemed a natural fit. Using Google Fusion Tables, Google authentication, and client-side JavaScript for the core of our current implementation has also allowed us to rapidly develop relatively lightweight mechanisms for contributing, using freely-available hosting and tools (GitHub Pages, Google App Engine) for the initial phases of the project.

The DC3 is excited to see where this project leads, and hopes to also lead by example in publishing this project using open tools under an open license, with openly-licensed contributions.

Survey Archaeology and Forms

Anyone who has done archaeology lately knows that we spend almost as much time looking at a form (or its digital equivalent) as at the trench, survey unit, landscape, or architectural feature. In general, forms are unattractive and at best functional (at worst, they are overwhelming belches of blank lines, boxes, and cryptic instructions).

Tomorrow the 2015 Western Argolid Regional Project season starts. We had a few little tweaks to make to the database and that led to some tweaking of the form and that led to some modifications in its appearance.

I’m sure I’m violating several laws of graphic design in my efforts, but I think I’ve improved our form’s legibility and added a bit of style. The font is Prime; it’s a free, sans serif, highly geometric font which adds some bling without encroaching too much on the utility of the form.

I also tried to standardize the placement of boxes. Almost all archaeological forms that I’ve encountered try to do too much in too little space. For WARP, we want to keep the form to a front and back page. So I tried to find ways to negotiate the constrained space of the form so that it was a little bit easier to follow and I tried to play a bit with orientation by extending some things to the right of the margin and some boxes to the left (in an orderly way) to index the form a bit and to give some more room for the free text boxes.

Open Access Library: Theological Commons

The Theological Commons is a digital library of over 80,000 resources on theology and religion. It consists mainly of public domain books but also includes periodicals, audio recordings, and other formats.

Please see the Acknowledgments page for information on our partners and sponsors.

Selection of materials for inclusion in the Theological Commons is guided by the Library’s Collection Development Policy. Additionally, due to legal restrictions on copyrighted works, the Theological Commons only includes materials that are out of copyright, or for which special permission has been obtained from the copyright holder. Typically, works published before 1923 are unambiguously out of copyright, and such works constitute the vast majority of content in the Theological Commons.

Open Access Journal: Journal of Textual Reasoning

The Journal of Textual Reasoning is the main publishing expression of the Society of Textual Reasoning, which sponsors an electronic list-serve [textualreasoning@list.mail.virginia.edu] and meetings at professional academic conferences. The Journal will publish essays in the exegetical analyses of Jewish texts and the practice of textual reasoning as well as statements in the on-going development of the theory of Textual Reasoning. The Journal will generally follow a particular theme in each issue and include reviews of books relevant to Textual Reasoning. In the traditions of rabbinic thought and dialogical philosophy, we aim to present individual articles along with commentaries to them. To subscribe or to check on your options, write to textualreasoning-request@list.mail.virginia.edu.

Volume 8, Number 1: Narrative, Textuality, and the Other

General Editors: Peter Ochs, Steven Kepnes

Issue Editors: Peter Ochs, Ashley Tate

Managing Editor: Ashley Tate

Introduction

Peter Ochs, University of Virginia

Part I: Language, Identity, and Textuality

The Teshuvah of Jacques Derrida: Judaism Hors-texte Emilie Kutash, St. Joseph’s College

The Plot within the Piyyut: Retelling the Story of Balak on the Liturgical Stage Laura Lieber, Duke University

Part II: Textual Reasonings for a “Vav” and a “Na”

About a Vav: Arguments for Changing the Nusach Masorti Regarding Hanukkah Bernhard Rohrbacher

The Binding of Isaac as a Trickster Narrative: And God Said “Na” Eugene F. Rogers, Jr, University of North Carolina at Greensboro

Part III: Reading Texts With and Against the Other

Inter-religious Dialogue and Debate: Ibn Kammuna’s Cultural Model Abdulrahman Al-Salimi, al-Tafahom Journal, Sultanate of Oman

Textual Reasoning as Constructive Conflict: A Reading of Talmud Bavli Hagigah 7a Jonathan Kelsen, Drisha Institute

Volume 7, Number 1 (March 2012): Autonomy, Community, and the Jewish Self

Volume 6, Number 2 (March 2011): The Female Ruse: Women’s Subversive Voices in Biblical and Rabbinic Texts

Volume 6, Number 1 (December 2010): Halakhah and Morality

Volume 5, Number 1 (December 2007): Prayer and Otherness

Volume 4, Number 3 (May 2006): Jewish Sensibilities

Volume 4, Number 2 (March 2006): Rational Rabbis

Volume 4, Number 1 (November 2005): The Ethics of the Neighbor

Volume 3, Number 1 (June 2004): Strauss and Textual Reasoning

Volume 2, Number 1 (June 2003): The Aqedah: Midrash as Visualization

Volume 1, Number 1 (2002): Why Textual Reasoning?

Old Series

The Journal of Textual Reasoning evolved from “The Postmodern Jewish Philosophy Bitnetwork,” a collaborative project begun in 1991. An archive of these correspondences and early iterations of the journal may be found here. The year 2002 marked the official transition to the Journal of Textual Reasoning, whose publications are listed above.

Installing Minecraft Forge and ComputerCraft

The occasion for writing this is the North Dallas .NET Users Group's first kids night, where MinecraftU will be teaching the kids (and adults who stay for it) about creating Minecraft mods with ComputerCraft. Hopefully others will find it useful too.

Note: These instructions are relevant for Minecraft 1.8 (and probably below), Minecraft Forge 1.7.10, ComputerCraft 1.73 and Windows 8.1 near the date of June 2nd, 2015. Otherwise YMMV.

The goal is to explain as best I can (I have limited experience with mods and Minecraft) the following:

- How the file system for Minecraft works in relation to mods

- How to install Minecraft Forge

- How to install ComputerCraft

I'll also explain my hangups along the way, just in case you run into the same issues. Based on various bits on the internet, these errors are apparently fairly common.

The Minecraft Mod and File System

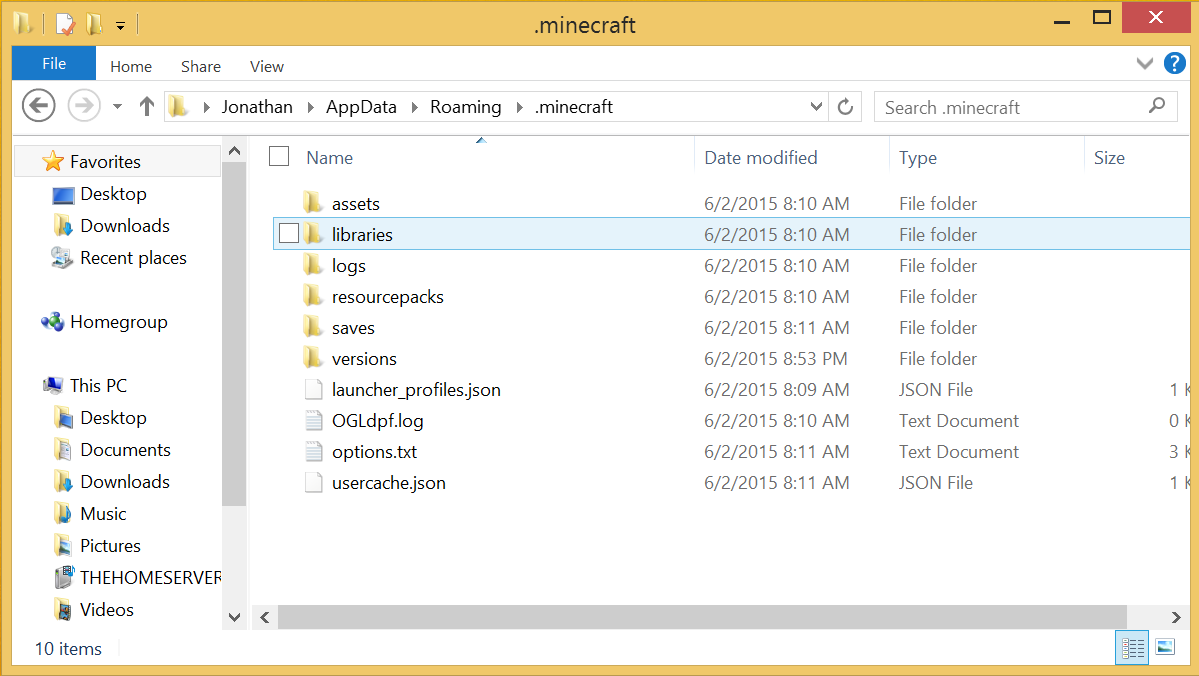

Mods often don't come with installers so you need to know how to navigate the file system to do what needs to be done. After installing Minecraft on my Windows 8.1 machine and running it at least once, I get the following on my hard drive:

Navigate there in Windows Explorer or go to the Run command (right-click on the Windows icon in the taskbar then select Run) and enter %appdata%/.minecraft.

There are three things there that I know something about. First is the saves folder. This is where you will find the saved worlds that are created every time you start a new world in Minecraft. Each world gets its own folder. To transfer a world from one computer to another, copy its folder from here into the same place on the other computer.

Next is the versions folder. We ran Minecraft for the first time on this particular computer today and it is on version 1.8.6, so inside the versions folder, I have a subfolder named 1.8.6. You will see why this is important in a moment.

The third folder is one that you don't see in the screenshot above and that probably won't exist unless you have already created it: the mods folder. If you don't yet have this folder, create it in the root of the .minecraft folder (so you should see it between logs and resourcepacks if it is all in alphabetical order).
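If you would rather script this than click around in Explorer, here is a minimal Python sketch of the same step. It assumes Python is installed (nothing in this walkthrough requires it), and the function names are my own invention rather than anything from Minecraft or Forge.

```python
import os
from pathlib import Path
from typing import Optional


def minecraft_dir(appdata: Optional[str] = None) -> Path:
    """Return the .minecraft folder under %APPDATA%.

    Pass appdata explicitly to point at a different location; otherwise
    the APPDATA environment variable (set on Windows) is used.
    """
    base = appdata if appdata is not None else os.environ["APPDATA"]
    return Path(base) / ".minecraft"


def ensure_mods_folder(root: Path) -> Path:
    """Create the mods folder in the root of .minecraft if it is missing."""
    mods = root / "mods"
    mods.mkdir(parents=True, exist_ok=True)  # does nothing if it already exists
    return mods
```

On the Windows machine described here, `ensure_mods_folder(minecraft_dir())` should leave you with a mods folder next to saves and versions.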

Step one is complete.

Minecraft Forge

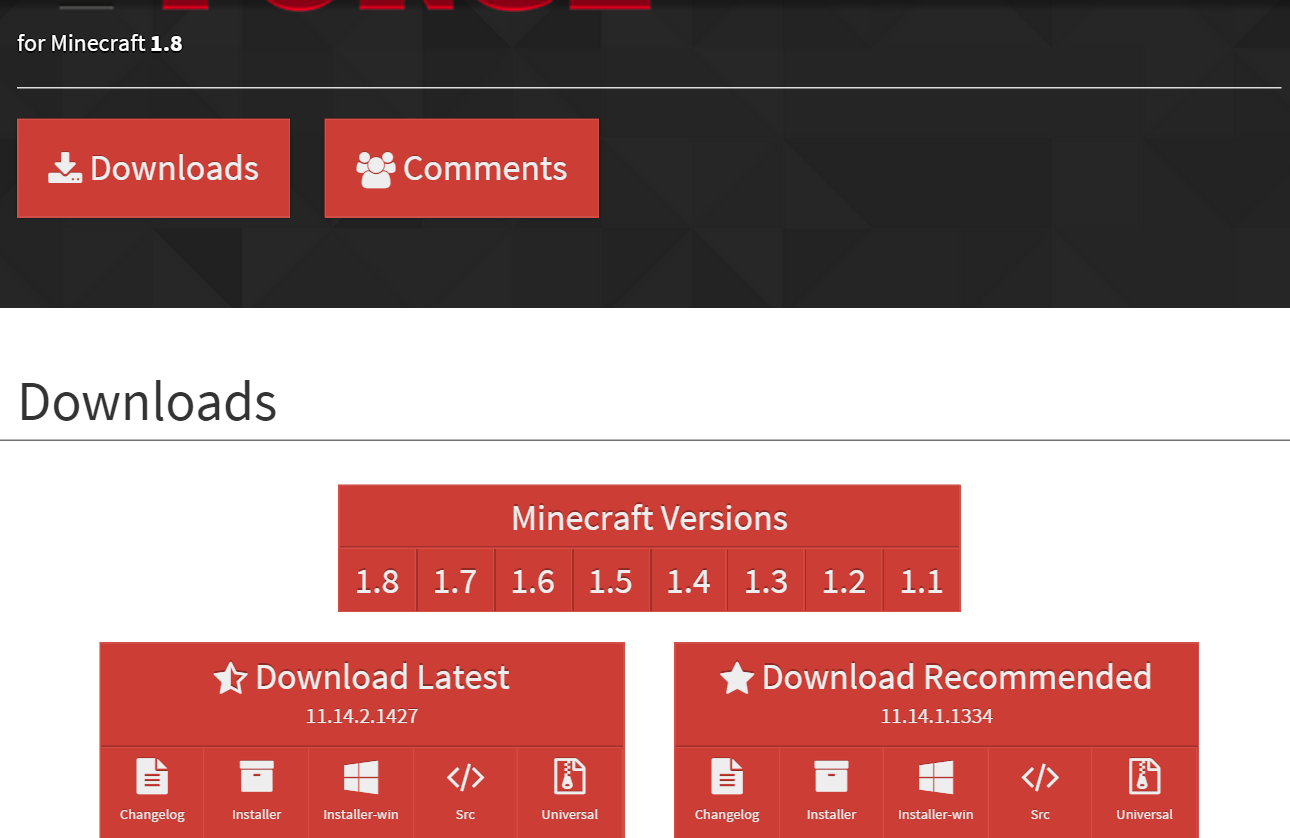

To use mods in Minecraft, you need something to bootstrap the mods and load them. Minecraft Forge is one way of doing that and is the way that ComputerCraft gets loaded, so we need to use it. So you go to the Minecraft Forge home page and it will show you downloads for Minecraft Forge. As of when this was written, it looks like this:

Click on the appropriate installer. For me at the moment it is installer-win under the recommended downloads.

Excursus: Versioning

So you might be thinking, "Gee, I'm on Minecraft 1.8.6 so I should download that!" That is entirely reasonable thinking but is in fact not always what you need. As a general rule, unless stated otherwise, assume all version numbers are exact: a newer version isn't necessarily compatible with mods built for an older one.

So in other words, if you find a Mod on the Internet that is supposed to work with Minecraft Forge 1.7, don't assume that it will work with 1.8 because it is higher. In my experience this is not the case. This means that the version of Forge you use (and you can have multiple versions installed, so that is good), will depend on the versions supported by the Mod.
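The rule of thumb here boils down to comparing version strings for equality, never for "greater than or equal". As a small illustration (the function names are made up for this post and are not part of Forge or any mod):

```python
from typing import List


def is_compatible(mod_requires: str, forge_built_for: str) -> bool:
    """Treat version numbers as exact: 1.8 does not satisfy a mod that wants 1.7.10."""
    return mod_requires.strip() == forge_built_for.strip()


def usable_forge_versions(mod_requires: str, available: List[str]) -> List[str]:
    """Keep only the Forge downloads that target exactly the version the mod names."""
    return [v for v in available if is_compatible(mod_requires, v)]
```

For a mod that says it needs 1.7.10, `usable_forge_versions("1.7.10", ["1.8", "1.7.10", "1.7.2"])` keeps only `"1.7.10"`, which is the download you want.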

End Excursus

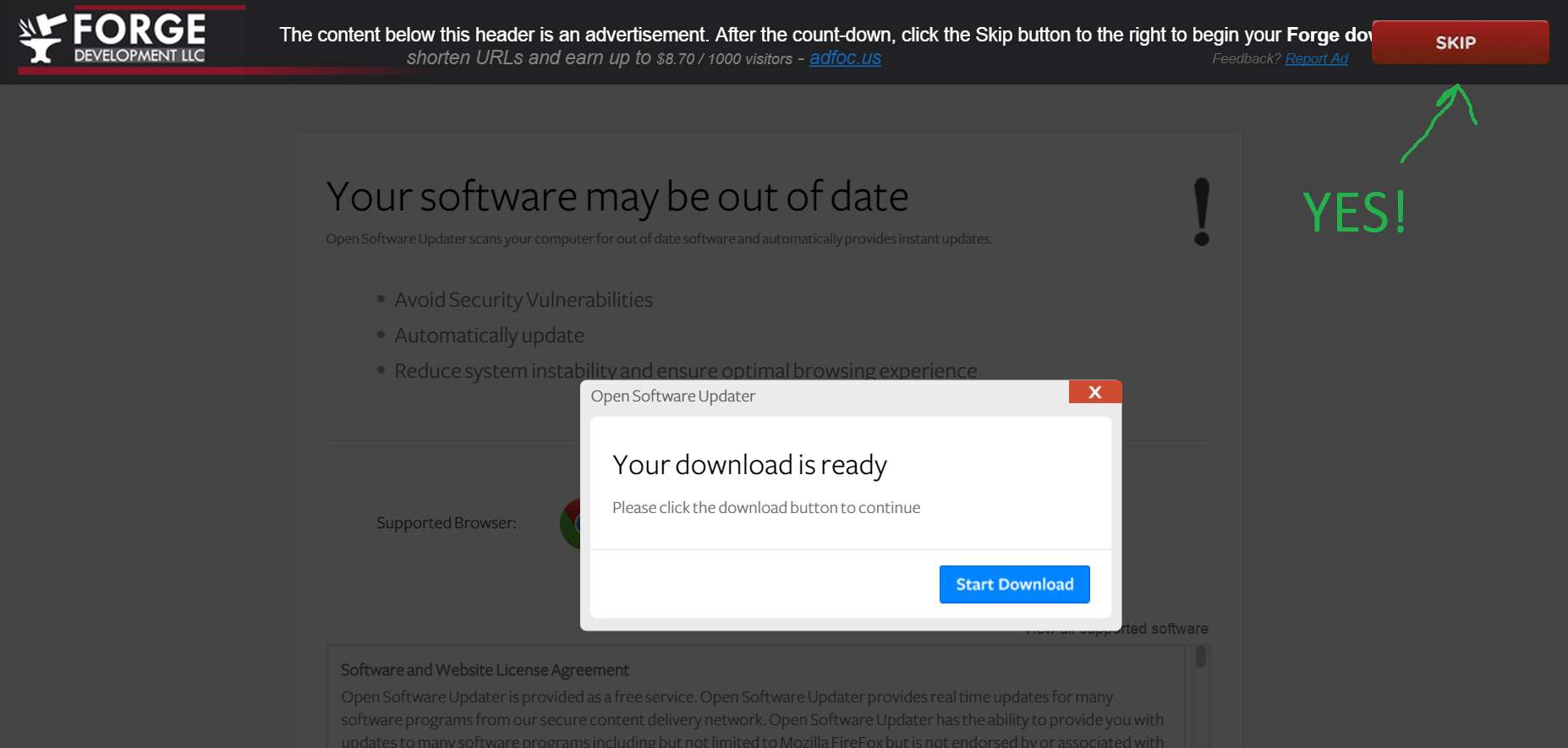

Jumping ahead, the ComputerCraft website says it works with version 1.7.10 of Minecraft Forge, so we want to install version 1.7.10. You will see the following. DON'T DO ANYTHING.

That's right, this page desperately wants you to install adware. So sad. Wait a few seconds until you see the following:

You should download a file that has a name something like this: forge-1.7.10-10.13.2.1291-installer-win.exe. Run it. You should see this.

I had a version of Java installed but it apparently wasn't good enough for the Forge installer, so I had to download the latest from Oracle. You may need to do so as well.

Also, you might end up seeing an error that looks like this:

The first time I went through this installation process, I did this for 1.8 because I didn't know about the versioning restrictions, so instead of "You need to run the version 1.7.10 manually at least once", I got "You need to run the version 1.8 manually at least once". This can happen for at least three reasons (I managed to run into all three...sad, I know).

First, you might get this error because you had never run Minecraft yet for that account. Running Minecraft once for a particular version will create a folder for that version in the versions folder we discussed above. So if you haven't done so, run Minecraft.

Second, you might get the error because you are installing this for another user of the computer other than the admin. If you look at the screen shot of the installer, you'll see Eric in the path. That's me. But I'm actually trying to get this running for my son's (Jonathan) user account, so even if Jonathan had run version 1.7.10, it wouldn't matter because it is looking in my folder. That is bad. So if you are installing for another user, change the path to have their name instead.

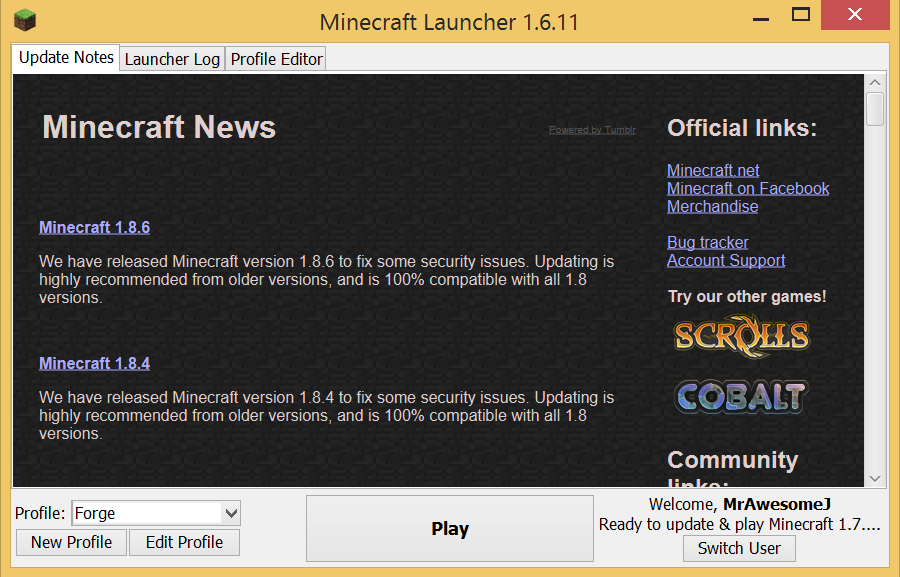

Third, you might get the error because you ran a version similar to what you are installing but not exactly what you are installing. For example, I was running 1.8.6 of Minecraft so I installed the 1.8 version of Minecraft Forge. I assumed since there wasn't a 1.8.6 version of Forge, it would work. Nope. I had to actually downgrade to 1.8.0 before I got the proper version directory. Sigh. Anyway, to do this, start the Minecraft launcher. You'll see something like this.

Click the Edit Profile button in the bottom-left-hand corner. In there, look for the label Use version:. Unless you have changed it, the selection probably reads something like "Use Latest Version". Change that to the version you need (in our case 1.7.10) and hit the Save Profile button. Then hit the Play button that you normally start Minecraft with. When you hit play, it will download the needed Minecraft files into the version folder that you need. Next, go back to the profile and change it back to "Use Latest Version". Forge will create a new profile for you in a bit.
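All three causes come down to the same question: does a folder with exactly the right version name exist under the right user's .minecraft folder? Here is a rough Python sketch of that check. It assumes the default Windows layout where a user's %appdata% is `Users\<name>\AppData\Roaming`, and the function names are my own, not anything the Forge installer exposes.

```python
from pathlib import Path


def version_folder(users_root: str, user: str, version: str) -> Path:
    """Build the path to versions/<version> for a given Windows user.

    Assumes the default profile layout: <users_root>/<user>/AppData/Roaming.
    """
    return (Path(users_root) / user / "AppData" / "Roaming"
            / ".minecraft" / "versions" / version)


def has_run_version(users_root: str, user: str, version: str) -> bool:
    """True only if that exact version has been run at least once by that user."""
    return version_folder(users_root, user, version).is_dir()
```

For example, `has_run_version("C:/Users", "Jonathan", "1.7.10")` should be true before you run the 1.7.10 installer for that account. A 1.8.6 folder does not count for 1.8, and a folder under a different user does not count either.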

Assuming we are now past all of these errors or those like them, we can run the installer and it will work.

Yay! Two things should have happened. First, in your versions folder you should have a new subfolder for Minecraft Forge, which in my case is 1.7.10-Forge10.13.2.1291. Second, if you restart Minecraft, you should have a new profile. It will probably look like this:

If you use this new profile, when Minecraft is loaded, Forge should load any mods that you have in the mods directory (that I told you to create earlier). If you switch back to the other profile, you'll get the latest-and-greatest version without mods. No, you don't get to have your cake and eat it too.

Before we move on, I would recommend renaming the Forge profile to something else, like "Forge 1.7.10." This is because you may need to install a newer version of Forge later, and when I did that last time it copied the new configuration over the old, which was annoying. So if you rename the Forge profile and install another version, it will create a new profile with the name "Forge", which you would then want to rename according to the actual version, and so on.

Okay, so that was a little tiring. We aren't done yet but we're close! We have to install the actual mod we want to use, not just the loader.

Installing ComputerCraft

Okay, go to the ComputerCraft download page and hit the download link. As of typing this, that link read "Download ComputerCraft 1.73 (for Minecraft 1.7.10)". Note those version numbers. This is why we got 1.7.10 of Minecraft up and running earlier. That should download a file with this name: ComputerCraft1.73.jar. That is a Java jar file and is the plugin. Take that file, copy it, and paste it into the mods folder we discussed earlier. If this is your first mod, this is probably the first file in this folder.
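If you would rather script the copy step than drag the file around, here is a small Python sketch. The `install_mod` name is hypothetical, and both paths are assumptions: pass in wherever your download landed and wherever your Minecraft directory is.

```python
import shutil
from pathlib import Path


def install_mod(jar_path: Path, minecraft_dir: Path) -> Path:
    """Copy a downloaded mod .jar into <minecraft_dir>/mods and return the new path."""
    if jar_path.suffix.lower() != ".jar":
        raise ValueError(f"expected a .jar file, got {jar_path.name}")
    mods_dir = minecraft_dir / "mods"
    # Create the mods folder if it does not exist yet.
    mods_dir.mkdir(parents=True, exist_ok=True)
    # copy2 keeps the file's timestamps; the original download is left in place.
    return Path(shutil.copy2(jar_path, mods_dir))
```

For example, `install_mod(Path("ComputerCraft1.73.jar"), minecraft_dir)` would leave a copy of the jar in the mods folder.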

Start Minecraft using the Forge profile we just created. The startup screen should have some different stuff on it. This is what mine looks like:

If you hit that Mods button, you will hopefully see the ComputerCraft mod loaded like you see below.

At this point we are theoretically ready to use ComputerCraft. I won't show you how to do that here. This is just about getting you started. I hope you found this useful!

Open Access Book: 11th International Conference on Greek Linguistics (Rhodes, 26-29 September 2013): Selected Papers

First-Level Course in Open Source GIS for Cultural Heritage

Registration is open until 20 June for new first-level courses in open source GIS for cultural heritage and land management, organized by the association Una_Quantum inc and to be held in Rome between June and July 2015.

New Open Access Journal: Thersites: Journal for Transcultural Presences & Diachronic Identities from Antiquity to Date

ISSN: 2364-7612

thersites is an international open access journal for innovative transdisciplinary classical studies founded in 2014 by Christine Walde, Filippo Carlà and Christian Stoffel.

- thersites expands classical reception studies by reflecting on Greco-Roman antiquity as a present phenomenon and diachronic culture that is part of today’s transcultural and highly diverse world. Antiquity, in our understanding, does not merely belong to the past, but is always experienced and engaged in the present.

- thersites contributes to the critical review of methods, theories, approaches and subjects in classical scholarship, which currently seems to be awkwardly divided between traditional perspectives and cultural turns.

- thersites brings together scholars, writers, essayists, artists and all kinds of agents in the culture industry to get a better understanding of how antiquity constitutes a part of today’s culture and (trans-)forms our present.

Vol. 1 (2015): Caesar's Salad: Antikerezeption im 20. und 21. Jahrhundert

Table of Contents

Prolegomena

Article

ConservareperRicordare: A Series of Nine MiBACT Conferences for EXPO 2015

#ConservareperRicordare is a series of nine meetings on Italian excellence in the field of culture, organized by the Ministero dei Beni e delle Attività Culturali e del Turismo as part of Expo 2015. The Ministry intends to offer a glimpse of the expertise in conserving and protecting our cultural heritage, which is unique in the world and forms the heart of our national identity.

18th Borsa Mediterranea del Turismo Archeologico

The 18th edition of the Borsa Mediterranea del Turismo Archeologico in Paestum is scheduled for 29 October to 1 November 2015 at the prestigious archaeological site in Campania. The Borsa hosts the only exhibition hall in the world devoted to archaeological heritage, as well as ArcheoVirtual, the innovative international exhibition of multimedia, interactive and virtual technologies. It is a place for in-depth discussion and outreach on topics related to cultural tourism and heritage; a meeting point for professionals, tourism and cultural operators, travelers and enthusiasts; and a business opportunity in the evocative setting of the Museo Archeologico, with the Workshop bringing together foreign demand and the supply of cultural and archaeological tourism.

7th Round Table on Polychromy in Ancient Sculpture and Architecture

From 4 to 6 November 2015, the 7th Round Table on Polychromy in Ancient Sculpture and Architecture will be held in Florence. The meeting, hosted by the Galleria degli Uffizi in the Sala di San Pier Scheraggio, is organized in collaboration with the SAGAS Department of the Università di Firenze, the Istituto per la Conservazione e Valorizzazione dei Beni Culturali of the CNR in Florence, and the Soprintendenza Archeologia della Toscana.

130 Internships for Young People in Projects to Protect Libraries, Museums and Archives

MiBACT has announced that the internships of the “1000 giovani per la cultura” fund are being reactivated for 2015 as well. Following last year's positive experience, the training program for young people up to 29 years of age in the cultural heritage and activities sector continues, as provided for by decree-law no. 76 of 2013 and confirmed by the “Art-bonus” decree-law no. 83 of 2014. For 2015 too, the decree provides for 130 training internships within projects of significant interest for the protection and enhancement of the nation's cultural heritage.

Front-End Performance with Vox Media

By Daniel Bachhuber, Erin Kissane

(CC BY-ND 2.0 tambako via Flickr)

Vox Media recently declared “performance bankruptcy,” and with Facebook calling out news organizations for eight-second load times, the time is ripe for a pragmatic discussion of issues and approaches in front-end performance optimization.

On Wednesday, May 20th, the newly launched News Nerdery Slack group hosted a casual chat about website performance with Dan Chilton, Vox Media's director of engineering, and Vox performance engineers Jason Ormand and Ian Carrico, with moderation by Fusion's director of engineering, Daniel Bachhuber.

The following is an edited and condensed summary of what they discussed. Coders and designers with an interest in journalism tech are welcome to join the group by contacting the group's founder, Bachhuber. If you're coming to SRCCON 2015, the group will also be gathering there and happy to get you signed up. (Members can read the full chat in Slack archives.)

Front-End Performance 101

Q. I'm new to front-end performance. What should I look for, and how should I look for it?

Jason Ormand: Page load time: specifically, getting the page load time as low as possible. Two tools you can use are the network tab in Chrome’s dev tools and Web Page Test. You should also track page weight (e.g. size in MB) and the number of HTTP requests.

Ian Carrico: Page load time isn’t the time to load everything, though […] Paul Irish just gave a great talk at FluentConf about thinking of performance in terms of his “RAIL” idea.

Gabriel Luethje: Filament Group does a great job of illustrating it too.
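As a rough illustration of the two numbers mentioned above (page weight and request count), here is a minimal Python sketch. The input format is an assumption: a plain list of per-request records with a hypothetical `size_bytes` field, such as you might export by hand from a network panel. It is not the output format of any particular tool.

```python
def summarize_requests(requests):
    """Tally request count and total page weight from per-request records.

    Each record is a dict with a hypothetical "size_bytes" key.
    """
    total_bytes = sum(r["size_bytes"] for r in requests)
    return {
        "request_count": len(requests),
        # Decimal megabytes (1 MB = 1,000,000 bytes), rounded for display.
        "page_weight_mb": round(total_bytes / 1_000_000, 3),
    }
```

Tracking these two values over time alongside load time gives a quick signal when a page starts to bloat.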

Q. What’s the single most challenging aspect to front-end performance?

Ormand: Since Vox has an ad-based revenue model … it’s ad performance. Many advertisers and vendor tools expect to be loaded synchronously. We are working to change that (to all async), but it’s a very difficult task with many considerations. Ensuring a web asset makes performance sense is relatively straightforward. But ensuring performance goals align with business goals can be very tricky business.

Carrico: Also, legacy code. There was a time on the web where Vox wrote JavaScript synchronously. Where we used tables, and didn’t use floats. But much like the change to RWD required a change in thinking about how we write code, a change to a focus on performance will require the same. And just like RWD requires altering how we design, how Vox thinks about projects, how we test our sites: Performance requires changing/challenging current perceptions on the development cycle and how we design/build web experiences.

Chilton: A large component of Vox’s job is to educate the rest of the team (designers, developers, editorial) about the potential performance implications of their decisions. We believe very strongly that performance should be everyone’s concern, not just the purview of our small team.

Daniel Bachhuber: Two more cents: 1) knowing where to begin with optimizations, and 2) making sure you don’t take one step forward during a performance sprint, and two steps back as a part of your normal product development. Fusion had a “performance week” a month and a half ago. We captured lessons learned on our blog. As a part of the process, we set up SpeedCurve to monitor site performance. However, we haven’t successfully adopted it.

Chilton: Vox has an internal tool called Tempo and is also running SpeedCurve.

Q. What’s one front-end performance trick you wish you had learned at the very beginning?

Carrico: The trick is that there are no tricks. It’s all about where you get your information. There are a lot of people out there who are doing great work, writing posts, etc. Finding those people, reading their things, and trying to implement is more useful than anything.

Ormand: To get started, you should investigate and measure. Form a hypothesis. Make a change with as limited a scope as possible. Measure again. Validate or invalidate the hypothesis. If the data checks out, push to production. If not, wash, rinse, repeat.

Chilton: It was learning to dig deeper into the dev tools. Some recommended videos:

Ryan Murphy: Giving things a pass with Google Pagespeed is crazy illuminating.
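The measure, hypothesize, change, measure-again loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name, the use of medians, and the 5% threshold are all hypothetical choices, not anything the participants said they use.

```python
from statistics import median


def validate_change(before_ms, after_ms, min_improvement=0.05):
    """Compare load-time samples taken before and after one small change.

    Returns (validated, improvement), where improvement is the fractional
    drop in median load time. Medians are used so that a single slow run
    does not dominate the comparison.
    """
    baseline = median(before_ms)
    candidate = median(after_ms)
    improvement = (baseline - candidate) / baseline
    return improvement >= min_improvement, improvement
```

If `validated` comes back false, the hypothesis did not hold at that threshold, and the loop starts again with a new hypothesis.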

Common Issues in Front-End Performance

Q. What’s a front-end performance issue you’re currently working on?

Carrico: Asynchronous JavaScript code. This isn’t a “quick fix”, nor something we expect to get out the door tomorrow, but certainly something worth focusing on. Vox is working with several teams to get a better way of running all of our JavaScript in a unified fashion, and getting legacy code up to speed in the process.

Ormand: SPDY and HTTP/2 are coming to static assets at Vox pretty soon, which is exciting. Async ads are also on the roadmap, as is evolving performance tools around metrics tracking and workflow automation.

Carrico: I’ve been working on loading our fonts with LoadCSS and using Font Face Observer to ensure Vox doesn’t get any FOIT. So far, we have had great results. Jonathan Suh has a great post.

Q. Regarding LoadCSS and inlining critical CSS, have you found that challenging to work into CMS workflows?

Carrico: A little: When I was at Four Kitchens, we used it in Drupal, and just did some manual running / testing to ensure the output was right. But we got it to work fairly easily just by committing our critical CSS to git and having Drupal load it inline. The critical inline CSS was broken apart based on different template files (e.g. home vs. article).

Chilton: Web Page Test is an invaluable tool, and Vox is leveraging it internally with our Tempo app.
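The per-template critical CSS approach described above (one committed CSS file per template, loaded inline) might look roughly like this in Python. This is a sketch under assumptions, not Drupal's API or Four Kitchens' code: the `inline_critical_css` name and the `<template>.css` file layout are hypothetical.

```python
from pathlib import Path


def inline_critical_css(html: str, template: str, css_dir: Path) -> str:
    """Inline the committed critical CSS for one template into the page head.

    Assumes one file per template (e.g. home.css, article.css) in css_dir.
    If no file exists for the template, the HTML is returned unchanged.
    """
    css_file = css_dir / f"{template}.css"
    if not css_file.is_file():
        return html
    style = f"<style>{css_file.read_text(encoding='utf-8')}</style>"
    # Insert just before the first closing head tag.
    return html.replace("</head>", style + "</head>", 1)
```

Keeping the files in version control means the inlined output can be reviewed and tested like any other change.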

Q: Have you run into any push-back over the Flash of Unstyled Content?

Carrico: Not yet! But I did work heavily with designers to make sure they had some reasonable fallback fonts. The FoUT is not so much a FoUT as a Flash of Less Styled Text.

Q. Has anyone found lazy-loading images to be a big performance booster on news sites?

Ormand: Vox uses client-side JS to load images async and, in most places, hopefully as close to the perfect size as possible. Async image loading is a big win. Next up is support for WebP to save bandwidth and cut async load times.

Q: For your lazyloaded images, what happens when JavaScript on the page breaks? We (Fusion) had lazyload in production for a while, but were running into a variety of implementation issues that caused the general quality/reliability of images on our site to be subpar.

Carrico: Don’t let JavaScript break. But really, there should be some good testing in place to make sure broken JS isn’t added and, more importantly, error handling. Four Kitchens ran into this issue with some Drupal code, where one small piece would break, and then everything else would too. We ended up implementing more solid tests to check for that.

Q: How does Vox fit refactors into the product backlog?

Chilton: It depends on the scope of the refactor. We are constantly pushing and iterating Chorus with small refactors, but large ones (like making The Verge fully responsive) are approached more like a new vertical. In that case, we devote a team to the refactor project and take our time to make it as solid as possible.

Tools & Processes to Borrow

Q. What tools / processes do you use to make performance a feature of every project?

Ormand: Vox has Justice.js so everybody on product can view the performance impact of what we are building, both as we are building it and after it’s pushed to production.

Carrico: Vox also wants to have testing on each branch and notifications in GitHub PRs, but we haven’t yet gotten to a point where we can achieve that in a productive manner. The repo shows a really simple example of getting TravisCI performance testing into every PR. Clearly, it’s a very simple one… but something we want to do in a larger fashion.

Ormand: Vox is also looking to extend Tempo with Elasticsearch + Kibana to get more comparative metrics and map those back to our commit log. Mapping commits to performance changes is of high importance; we are considering automated reports that will go out to devs post-commit.
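To make the idea of mapping commits to performance changes concrete, here is a minimal Python sketch. It is not how Tempo works; the function name, the `(commit, load_ms)` input shape, and the 10% threshold are all assumptions for illustration.

```python
def flag_performance_changes(samples, threshold=0.10):
    """Flag commits whose load time moved noticeably versus the previous commit.

    samples: list of (commit_id, load_ms) tuples in commit order.
    Returns (commit_id, fractional_change) pairs; positive means slower,
    negative means faster.
    """
    flagged = []
    for (_, prev_ms), (commit, ms) in zip(samples, samples[1:]):
        change = (ms - prev_ms) / prev_ms
        if abs(change) >= threshold:
            flagged.append((commit, change))
    return flagged
```

The flagged list could feed the kind of automated post-commit report mentioned above, covering both gains and losses.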

Q. What else do you use for performance dashboards?

Ormand: SpeedCurve and Tempo are two synthetic perf tools Vox uses to measure long-term perf trends. This is mostly for the perf team, but all team members can look at our dashboards. We collect a lot of data via Tempo and use Grafana to compile it and represent it visually. It’s a pretty powerful interface for perusing data.

Q. What are your pain-points for doing performance testing on Travis?

Carrico: Two things: 1) Having an accurate testing environment that mimics production BEFORE code gets to production. 2) Setting up clear budgets on a legacy code base that reflects the end limit of what is allowed.
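A performance budget check of the kind mentioned above can be very small. The following Python sketch assumes hypothetical metric names and limits; it is not taken from Vox's setup or from any Travis CI feature.

```python
def check_budget(metrics, budget):
    """Return the metrics that exceed their budget.

    metrics: measured values, e.g. {"page_weight_kb": 1800, "requests": 90}
    budget:  upper limits using the same (hypothetical) metric names.
    Metrics without a budget entry are ignored.
    """
    return {
        name: (metrics[name], limit)
        for name, limit in budget.items()
        if name in metrics and metrics[name] > limit
    }
```

In a CI job, a non-empty result would be the signal to fail the build, for instance by exiting with a non-zero status after printing the violations.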

Q: Have you built a slackbot for these things yet?

Chilton: Good idea for Vox!!! I would love to see alerts sent out for both big perf gains and losses.

Q. There are more and more tools for measuring page load. What do people use for measuring jank / user experience after the page has loaded and the scrolling begins?

Carrico: I test jank purely in Chrome’s dev tools. It may not show everybody’s experience, but the tools are incredibly deep and can help find a variety of issues.

Ormand: Justice.js has a streaming FPS meter, which was designed to give developers feedback on scroll-related issues. If you see peaks and valleys during scroll, you may have an issue. Then open up dev tools and find out why.
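For readers wondering what the "peaks and valleys" amount to numerically, here is a small Python sketch that works on a list of frame timestamps recorded during a scroll. It is not Justice.js code; the function name and the 60 fps frame budget are assumptions.

```python
def frame_stats(timestamps_ms, budget_ms=1000 / 60):
    """Summarize frame timing from timestamps (in milliseconds) of rendered frames.

    A frame counts as slow when it took longer than budget_ms, which
    defaults to the roughly 16.7 ms available per frame at 60 fps.
    """
    durations = [b - a for a, b in zip(timestamps_ms, timestamps_ms[1:])]
    if not durations:
        return {"average_fps": 0.0, "slow_frames": 0}
    return {
        "average_fps": 1000 * len(durations) / sum(durations),
        "slow_frames": sum(1 for d in durations if d > budget_ms),
    }
```

A run with many slow frames is the cue to open the dev tools timeline and look for the cause.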

Carrico: Super critical JS should be inline, but everything else should just be progressive enhancement.

Evangelizing for Performance

Q. How do you evangelize performance to the rest of your team / company?

Carrico: Vox is working on a mixture of things. We are lucky that we have a team that already understands the need for frontend performance, but there are many ways to get a team “on board”. Sharing performance stats, goals, and budgets is great, but generally requires an understanding of what things like “Speed Index” actually mean. Something we stole from Etsy / Lara Hogan is to share videos from Web Page Test of our site loading at different speeds and compared to competitors. Nothing beats having a video that anybody can take a look at to see the exact impact performance can make. We also like to share stats or studies we find that show the impact of performance. The most excellent Chris Ruppel and I did a talk on creating a culture of performance, and specific things you can do with everybody on the team (from CXO to developer) to get more buy-in.

Chilton: Surfacing important performance metrics is one of our most important tasks. We want to make those numbers omnipresent and supremely accessible.

Museo Digitale: Comparing Ideas for Innovation on the Cultural Web

As part of Social Media Week, a worldwide week of events devoted to the web, technology, innovation and social media, scheduled in Rome from 8 to 12 June 2015 and simultaneously in 12 other international cities representing 5 continents, the Direzione generale Musei of MiBACT and Ales Spa are organizing, on 190 [sic] June 2015, the meeting “MUSEO DIGITALE - Idee a Confronto per l'innovazione del web culturale”.

Summer School in Aerial Archaeology and Close-Range Remote Sensing with Remotely Piloted Aircraft Systems (Drones)

The Dipartimento di Beni Culturali - Laboratorio di “Topografia antica e Fotogrammetria (LabTAF)” of the Università del Salento, in collaboration with the universities of Cassino, Sassari, Siena, Ghent (Belgium) and Cambridge (England), the CNR IBAM and the company FlyTop, and with the support of the Soprintendenza Archeologia Lazio e Etruria meridionale and the Comune di Castrocielo (Frosinone), is organizing the Summer School “Archeologia Aerea e Telerilevamento di prossimità con Sistemi Aeromobili a Pilotaggio Remoto (droni)” from 31 August to 6 September 2015 at Aquinum – Castrocielo (Frosinone).